When I started writing Think Stats, I wanted to avoid dire warnings about all the things people do wrong with statistics. I enjoy feeling smug and pointing out other people's mistakes as much as the next statistics Nazi, but I don't see any evidence that the warnings have much effect on the quality of statistical analysis in the press.

Maybe another approach is in order. Instead of making statistics seem like an arcane art that can only be practiced correctly by trained professionals, I would like to emphasize that the majority of statistical analysis is very simple. Deep mathematics is seldom necessary; usually it is enough to ask good questions and apply simple techniques.

As an example, I'm going to do what I said I wouldn't: point out other people's mistakes. Here is an excerpt from a recent ASEE newsletter, Connections:

The paragraph tries to summarize the data in the table, and fails. Let's take it point by point:

Claim 1) The percentages of recipients of doctoral degrees from all engineering disciplines by race and ethnicity show a great deal of stability over the last ten years.

Validity: BASICALLY TRUE. If they had just stopped here, everything would be fine. A graph would make this conclusion easier to see. I copied their data into Google Docs and generated this graph:

Yup. Pretty flat.

The other thing that jumps out of this graph is that something funny happened in 2010. The caption in the article explains, "New race and ethnicity categories, first reported in 2010 ... are combined under 'other'." This change in the survey seems to have caused a decrease in the number of respondents reporting "other", and an increase in "Caucasian." I can't explain why it had that effect, but it is not surprising that it had an effect.

Claim 2) African Americans, as a percentage of total of all recipients of doctoral degrees grew about half a percent from 2001 to 2010;

Validity: FALSE. Because the survey changed in 2010, it is not a good idea to summarize the results by comparing the first and last data points. If we drop 2010, there is no evidence of any meaningful change in the percentage of African Americans.

Claim 3) Hispanics increased by about two percent during the same time period;

Validity: FALSE. Again, if we ignore 2010, there is no evidence of change.

Claim 4) Asian Americans stayed virtually unchanged;

Validity: MAYBE. If anything, there is a small decrease. Again ignoring 2010, the last three data points are all below the previous six. But if you fit a trend line, the slope is not statistically significant.

Claim 5) Caucasians increased by percent.

Validity: FALSE. If we ignore 2010, there is a clear downward trend. If you fit a trend line, the slope is about -0.6 percentage points per year, and the p-value is 0.003.

Claim 6) No comment on "Other"

Validity: ERROR OF OMISSION. There is a clear upward trend, with or without the last data point. The fitted slope is almost 0.9 percentage points per year, and the p-value is 0.005.
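To show how little machinery a trend line needs, here is a minimal sketch in Python. It is not what I ran in the spreadsheet: the function names are mine, the series is a made-up straight line rather than the ASEE numbers, and the p-value comes from a permutation test instead of the usual t-test, so it will not reproduce the figures quoted above.

```python
import random

def slope(xs, ys):
    """Least-squares slope of ys regressed on xs."""
    n = len(xs)
    mx = sum(xs) / n
    my = sum(ys) / n
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sxx = sum((x - mx) ** 2 for x in xs)
    return sxy / sxx

def permutation_p_value(xs, ys, iters=10000, seed=17):
    """Two-sided p-value for the slope, estimated by shuffling ys.

    This is a permutation test (an assumption of this sketch), not the
    t-test a spreadsheet or stats package would normally report.
    """
    rng = random.Random(seed)
    observed = abs(slope(xs, ys))
    shuffled = list(ys)
    count = 0
    for _ in range(iters):
        rng.shuffle(shuffled)
        # Small tolerance so float rounding does not hide exact ties.
        if abs(slope(xs, shuffled)) >= observed - 1e-12:
            count += 1
    # Add-one correction keeps the estimate away from exactly zero.
    return (count + 1) / (iters + 1)

# Synthetic placeholder series (NOT the survey data): an exact straight
# line that falls 0.6 percentage points per year, with 2010 left out.
years = list(range(2001, 2010))
percent = [60.0 - 0.6 * (year - 2001) for year in years]

trend = slope(years, percent)
p_value = permutation_p_value(years, percent)
print("slope per year:", round(trend, 3))
print("permutation p-value:", p_value)
```

With real data you would replace `percent` with one column of the table and decide up front whether to drop the 2010 point.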

So let's summarize:

| Race | Article claims | Actually |
|---|---|---|
| African American | +0.5 percentage points | No change |
| Hispanic | +2 percentage points | No change |
| Asian American | No change | Maybe down |
| Caucasian | Up | -4 percentage points |
| Other | No comment | +6 percentage points |

What's the point of this? Granted, a newsletter from ASEE is not the Proceedings of the National Academy of Sciences, so maybe I shouldn't pick on it. But it makes a nice example of simple statistics gone wrong. I guess that makes me a statistics Nazi after all.

Here's one more lesson: if you run a survey every year, avoid changing the questions, or even the selection of responses. It is almost impossible to do time series analysis across different versions of a question.

If you read this far, here's a small reward. The electronic edition of Think Stats is on sale now at 50% off, which makes it $8.49.

Wednesday, February 29, 2012

Friday, February 24, 2012

Self-organized criticality and holistic models

My new book, Think Complexity, will be published by O'Reilly Media in March. For people who can't stand to wait that long, I am publishing excerpts here. If you really can't wait, you can read the free version at thinkcomplex.com.

In Part One I outline the topics in Think Complexity and contrasted a classical physical model of planetary orbits with an example from complexity science: Schelling's model of racial segregation.

In Part Two I outline some of the ways complexity differs from classical science. In Part Three, I describe differences in the ways complex models are used, and their effects in engineering and (of all things) epistemology.

Part Four pulls together discussions from two chapters: the Watts-Strogatz model of small world graphs, and the Barabasi-Albert model of scale free networks. And now, Part Five: Self-organized criticality.

Sand piles

In 1987 Bak, Tang and Wiesenfeld published a paper in Physical Review Letters, ``Self-organized criticality: an explanation of 1/f noise.'' You can download it from http://prl.aps.org/abstract/PRL/v59/i4/p381_1.

The title takes some explaining. A system is ``critical'' if it is in transition between two phases; for example, water at its freezing point is a critical system. A variety of critical systems demonstrate common behaviors:

Critical systems are usually unstable. For example, to keep water in a partially frozen state requires active control of the temperature. If the system is near the critical temperature, a small deviation tends to move the system into one phase or the other.

Many natural systems exhibit characteristic behaviors of criticality, but if critical points are unstable, they should not be common in nature. This is the puzzle Bak, Tang and Wiesenfeld address. Their solution is called self-organized criticality (SOC), where ``self-organized'' means that from any initial condition, the system tends to move toward a critical state, and stay there, without external control.

As an example, they propose a model of a sand pile. The model is not realistic, but it has become the standard example of self-organized criticality.

The model is a 2-D cellular automaton where the state of each cell represents the slope of a part of a sand pile. During each time step, each cell is checked to see whether it exceeds some critical value. If so, an ``avalanche'' occurs that transfers sand to neighboring cells; specifically, the cell's slope is decreased by 4, and each of the 4 neighbors is increased by 1. At the perimeter of the grid, all cells are kept at zero slope, so (in some sense) the excess spills over the edge.

Bak et al. let the system run until it is stable, then observe the effect of small perturbations; they choose a cell at random, increment its value by 1, and evolve the system, again, until it stabilizes.

For each perturbation, they measure the total number of cells that are affected by the resulting avalanche. Most of the time it is small, usually 1. But occasionally a large avalanche affects a substantial fraction

of the grid. The distribution of turns out to be long-tailed, which supports the claim that the system is in a critical state.

[Think Complexity presents the details of this model and tests for long-tailed distributions, fractal geometry, and 1/f noise. For this excerpt, I'll skip to the discussion at the end of the chapter.]

Reductionism and Holism

The original paper by Bak, Tang and Wiesenfeld is one of the most frequently-cited papers in the last few decades. Many new systems have been shown to be self-organized critical, and the sand-pile model, in particular, has been studied in detail.

As it turns out, the sand-pile model is not a very good model of a sand pile. Sand is dense and not very sticky, so momentum has a non-negligible effect on the behavior of avalanches. As a result, there are fewer very large and very small avalanches than the model predicts, and the distribution is not long tailed.

Bak has suggested that this observation misses the point. The sand pile model is not meant to be a realistic model of a sand pile; it is meant to be a simple example of a broad category of models.

To understand this point, it is useful to think about two kinds of models, reductionist and holistic. A reductionist model describes a system by describing its parts and their interactions. When a reductionist model is used as an explanation, it depends on an analogy between the components of the model and the components of the system.

For example, to explain why the ideal gas law holds, we can model the molecules that make up a gas with point masses, and model their interactions as elastic collisions. If you simulate or analyze this model, you find that it obeys the ideal gas law. This model is satisfactory to the degree that molecules in a gas behave like molecules in the model. The analogy is between the parts of the system and the parts of the model.

Holistic models are more focused on similarities between systems and less interested in analogous parts. A holistic approach to modeling often consists of two steps, not necessarily in this order:

1. Identify a kind of behavior that appears in a variety of systems.

2. Find the simplest model that demonstrates that behavior.

For example, in The Selfish Gene, Richard Dawkins suggests that genetic evolution is just one example of an evolutionary system. He identifies the essential elements of the category---discrete replicators, variability and differential reproduction---and proposes that any system that has these elements displays similar behavior, including complexity without design. As another example of an evolutionary system, he proposes memes, which are thoughts or behaviors that are ``replicated'' by transmission from person to person. As memes compete for the resource of human attention, they evolve in ways that are similar to genetic evolution.

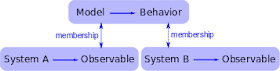

Critics of memetics have pointed out that memes are a poor analogy for genes. Memes differ from genes in many obvious ways. But Dawkins has argued that these differences are beside the point because memes are not supposed to be analogous to genes. Rather, memetics and genetics are examples of the same category---evolutionary systems. The differences between them emphasize the real point, which is that evolution is a general model that applies to many seemingly disparate systems. The logical structure of this argument is shown in this diagram:

Bak has made a similar argument that self-organized criticality is a general model for a broad category of systems. According to Wikipedia, ``SOC is typically observed in slowly-driven non-equilibrium systems with extended degrees of freedom and a high level of nonlinearity.''

Many natural systems demonstrate behaviors characteristic of critical systems. Bak's explanation for this prevalence is that these systems are examples of the broad category of self-organized criticality. There are two ways to support this argument. One is to build a realistic model of a particular system and show that the model exhibits SOC. The second is to show that SOC is a feature of many diverse models, and to identify the essential characteristics those models have in common.

The first approach, which I characterize as reductionist, can explain the behavior of a particular system. The second, holistic, approach, explains the prevalence of criticality in natural systems. They are different models with different purposes.

For reductionist models, realism is the primary virtue, and simplicity is secondary. For holistic models, it is the other way around.

I am using "reductionism" and "holism" here is a descriptive sense, not as technical labels for these models. For more general discussion of these terms, see http://en.wikipedia.org/wiki/Reductionism and http://en.wikipedia.org/wiki/Holism.

SOC, causation and prediction

If a stock market index drops by a fraction of a percent in a day, there is no need for an explanation. But if it drops 10, people want to know why. Pundits on television are willing to offer explanations, but the real answer may be that there is no explanation.

Day-to-day variability in the stock market shows evidence of criticality: the distribution of value changes is long-tailed and the time series exhibits noise. If the stock market is a self-organized critical system, we should expect occasional large changes as part of the ordinary behavior of the market.

The distribution of earthquake sizes is also long-tailed, and there are simple models of the dynamics of geological faults that might explain this behavior. If these models are right, they imply that large earthquakes are unexceptional; that is, they do not require explanation any more than small earthquakes do.

Similarly, Charles Perrow has suggested that failures in large engineered systems, like nuclear power plants, are like avalanches in the sand pile model. Most failures are small, isolated and harmless, but occasionally a coincidence of bad fortune yields a catastrophe. When big accidents occur, investigators go looking for the cause, but if Perrow's ``normal accident theory'' is correct, there may be no cause.

These conclusions are not comforting. Among other things, they imply that large earthquakes and some kinds of accidents are fundamentally unpredictable. It is impossible to look at the state of a critical system and say whether a large avalanche is ``due.'' If the system is in a critical state, then a large avalanche is always possible. It just depends on the next grain of sand.

In a sand-pile model, what is the cause of a large avalanche? Philosophers sometimes distinguish the proximate cause, which is most immediately responsible, from the ultimate cause, which is, for whatever reason, considered the true cause.

In the sand-pile model, the proximate cause of an avalanche is a grain of sand, but the grain that causes a large avalanche is identical to any other grain, so it offers no special explanation. The ultimate cause of a large avalanche is the structure and dynamics of the systems as a whole: large avalanches occur because they are a property of the system.

Many social phenomena, including wars, revolutions, epidemics, inventions and terrorist attacks, are characterized by long-tailed distributions. If the reason for these distributions is that social systems are critical, that suggests that major historical events may be fundamentally unpredictable and unexplainable.

Questions

[Think Complexity can be used as a textbook, so it includes exercises and topics for class discussion. Here are some ideas for discussion and further reading.]

1. In a 1996 paper in Nature, Frette et al report the results of experiments with rice piles (http://www.nature.com/nature/journal/v379/n6560/abs/379049a0.html). They find that some kinds of rice yield evidence of critical behavior, but others do not.

Similarly, Pruessner and Jensen studied large-scale versions of the forest fire model (using an algorithm similar to Newman and Ziff's). In their 2004 paper, ``Efficient algorithm for the forest fire model,'' they present evidence that the system is not critical after all (http://pre.aps.org/abstract/PRE/v70/i6/e066707). How do these results bear on Bak's claim that SOC explains the prevalence of critical phenomena in nature?

2. In The Fractal Geometry of Nature, Benoit Mandelbrot proposes what he calls a ``heretical'' explanation for the prevalence of long-tailed distributions in natural systems (page 344). It may not be, as Bak suggests, that many systems can generate this behavior in isolation. Instead there may be only a few, but there may be interactions between systems that cause the behavior to propagate.

To support this argument, Mandelbrot points out:

What do you think of this argument? Would you characterize it as reductionist or holist?

3. Read about the ``Great Man'' theory of history at http://en.wikipedia.org/wiki/Great_man_theory. What implication does self-organized criticality have for this theory?

In Part One I outlined the topics in Think Complexity and contrasted a classical physical model of planetary orbits with an example from complexity science: Schelling's model of racial segregation.

In Part Two I outlined some of the ways complexity differs from classical science. In Part Three, I described differences in the ways complex models are used, and their effects in engineering and (of all things) epistemology.

Part Four pulls together discussions from two chapters: the Watts-Strogatz model of small world graphs, and the Barabasi-Albert model of scale free networks. And now, Part Five: Self-organized criticality.

Sand piles

In 1987 Bak, Tang and Wiesenfeld published a paper in Physical Review Letters, ``Self-organized criticality: an explanation of 1/f noise.'' You can download it from http://prl.aps.org/abstract/PRL/v59/i4/p381_1.

The title takes some explaining. A system is ``critical'' if it is in transition between two phases; for example, water at its freezing point is a critical system. A variety of critical systems demonstrate common behaviors:

- Long-tailed distributions of some physical quantities: for example, in freezing water the distribution of crystal sizes is characterized by a power law.

- Fractal geometries: freezing water tends to form fractal patterns---the canonical example is a snowflake. Fractals are characterized by self-similarity; that is, parts of the pattern resemble scaled copies of the whole.

- Variations in time that exhibit pink noise: what we call ``noise'' is a time series with many frequency components. In ``white'' noise, all of the components have equal power. In ``pink'' noise, low-frequency components have more power than high-frequency components. Specifically, the power at frequency f is proportional to 1/f. Visible light with this power spectrum looks pink, hence the name.
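To pin down the last item, here is one way to write the relationship. The exponent β and the constant c are notation I am introducing here; they do not appear in the paper's title or in the list above.

```latex
P(f) \propto \frac{1}{f^{\beta}}
\quad\Longrightarrow\quad
\log P(f) = -\beta \log f + c
```

White noise corresponds to β = 0 (a flat spectrum) and pink noise to β = 1, so on a log-log plot of power versus frequency, pink noise shows up as a straight line with slope -1.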

Critical systems are usually unstable. For example, to keep water in a partially frozen state requires active control of the temperature. If the system is near the critical temperature, a small deviation tends to move the system into one phase or the other.

Many natural systems exhibit characteristic behaviors of criticality, but if critical points are unstable, they should not be common in nature. This is the puzzle Bak, Tang and Wiesenfeld address. Their solution is called self-organized criticality (SOC), where ``self-organized'' means that from any initial condition, the system tends to move toward a critical state, and stay there, without external control.

As an example, they propose a model of a sand pile. The model is not realistic, but it has become the standard example of self-organized criticality.

The model is a 2-D cellular automaton where the state of each cell represents the slope of a part of a sand pile. During each time step, each cell is checked to see whether it exceeds some critical value. If so, an ``avalanche'' occurs that transfers sand to neighboring cells; specifically, the cell's slope is decreased by 4, and each of the 4 neighbors is increased by 1. At the perimeter of the grid, all cells are kept at zero slope, so (in some sense) the excess spills over the edge.

Bak et al. let the system run until it is stable, then observe the effect of small perturbations; they choose a cell at random, increment its value by 1, and evolve the system, again, until it stabilizes.

For each perturbation, they measure the total number of cells that are affected by the resulting avalanche. Most of the time it is small, usually 1. But occasionally a large avalanche affects a substantial fraction of the grid. The distribution of avalanche sizes turns out to be long-tailed, which supports the claim that the system is in a critical state.
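Here is a minimal sketch of the model as described above. It is not the code from the book. The threshold of 3, the starting level, the function names, and the decision to count an avalanche as "the perturbed cell plus every cell that topples" are all assumptions of mine, and cells are updated in place rather than strictly in lock step.

```python
import random

NEIGHBORS = ((-1, 0), (1, 0), (0, -1), (0, 1))

def make_grid(n, level):
    """n x n grid: interior cells start at `level`, perimeter cells at 0."""
    return [[level if 0 < i < n - 1 and 0 < j < n - 1 else 0
             for j in range(n)] for i in range(n)]

def relax(grid, threshold):
    """Topple cells in place until none exceeds `threshold`.

    Returns the set of (row, col) cells that toppled at least once.
    """
    n = len(grid)
    toppled = set()
    changed = True
    while changed:
        changed = False
        for i in range(1, n - 1):
            for j in range(1, n - 1):
                if grid[i][j] > threshold:
                    grid[i][j] -= 4
                    for di, dj in NEIGHBORS:
                        grid[i + di][j + dj] += 1
                    toppled.add((i, j))
                    changed = True
        # Perimeter cells are held at zero, so sand pushed onto them is lost.
        for k in range(n):
            grid[0][k] = grid[n - 1][k] = grid[k][0] = grid[k][n - 1] = 0
    return toppled

def perturb(grid, i, j, threshold):
    """Add one unit at (i, j), relax, and return the avalanche size."""
    grid[i][j] += 1
    return len(relax(grid, threshold) | {(i, j)})

def avalanche_sizes(n=12, steps=500, threshold=3, seed=0):
    """Stabilize an overloaded grid, then record one size per perturbation."""
    rng = random.Random(seed)
    grid = make_grid(n, level=threshold + 5)
    relax(grid, threshold)
    sizes = []
    for _ in range(steps):
        i = rng.randrange(1, n - 1)
        j = rng.randrange(1, n - 1)
        sizes.append(perturb(grid, i, j, threshold))
    return sizes

sizes = avalanche_sizes()
print("smallest:", min(sizes), "largest:", max(sizes))
```

A histogram of `sizes` on a log-log scale is the natural next step if you want to check whether the tail looks long.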

[Think Complexity presents the details of this model and tests for long-tailed distributions, fractal geometry, and 1/f noise. For this excerpt, I'll skip to the discussion at the end of the chapter.]

Reductionism and Holism

The original paper by Bak, Tang and Wiesenfeld is one of the most frequently-cited papers in the last few decades. Many new systems have been shown to be self-organized critical, and the sand-pile model, in particular, has been studied in detail.

As it turns out, the sand-pile model is not a very good model of a sand pile. Sand is dense and not very sticky, so momentum has a non-negligible effect on the behavior of avalanches. As a result, there are fewer very large and very small avalanches than the model predicts, and the distribution is not long-tailed.

Bak has suggested that this observation misses the point. The sand pile model is not meant to be a realistic model of a sand pile; it is meant to be a simple example of a broad category of models.

To understand this point, it is useful to think about two kinds of models, reductionist and holistic. A reductionist model describes a system by describing its parts and their interactions. When a reductionist model is used as an explanation, it depends on an analogy between the components of the model and the components of the system.

For example, to explain why the ideal gas law holds, we can model the molecules that make up a gas with point masses, and model their interactions as elastic collisions. If you simulate or analyze this model, you find that it obeys the ideal gas law. This model is satisfactory to the degree that molecules in a gas behave like molecules in the model. The analogy is between the parts of the system and the parts of the model.

Holistic models are more focused on similarities between systems and less interested in analogous parts. A holistic approach to modeling often consists of two steps, not necessarily in this order:

1. Identify a kind of behavior that appears in a variety of systems.

2. Find the simplest model that demonstrates that behavior.

For example, in The Selfish Gene, Richard Dawkins suggests that genetic evolution is just one example of an evolutionary system. He identifies the essential elements of the category---discrete replicators, variability and differential reproduction---and proposes that any system that has these elements displays similar behavior, including complexity without design. As another example of an evolutionary system, he proposes memes, which are thoughts or behaviors that are ``replicated'' by transmission from person to person. As memes compete for the resource of human attention, they evolve in ways that are similar to genetic evolution.

Critics of memetics have pointed out that memes are a poor analogy for genes. Memes differ from genes in many obvious ways. But Dawkins has argued that these differences are beside the point because memes are not supposed to be analogous to genes. Rather, memetics and genetics are examples of the same category---evolutionary systems. The differences between them emphasize the real point, which is that evolution is a general model that applies to many seemingly disparate systems. The logical structure of this argument is shown in this diagram:

Bak has made a similar argument that self-organized criticality is a general model for a broad category of systems. According to Wikipedia, ``SOC is typically observed in slowly-driven non-equilibrium systems with extended degrees of freedom and a high level of nonlinearity.''

Many natural systems demonstrate behaviors characteristic of critical systems. Bak's explanation for this prevalence is that these systems are examples of the broad category of self-organized criticality. There are two ways to support this argument. One is to build a realistic model of a particular system and show that the model exhibits SOC. The second is to show that SOC is a feature of many diverse models, and to identify the essential characteristics those models have in common.

The first approach, which I characterize as reductionist, can explain the behavior of a particular system. The second approach, which is holistic, explains the prevalence of criticality in natural systems. They are different models with different purposes.

For reductionist models, realism is the primary virtue, and simplicity is secondary. For holistic models, it is the other way around.

I am using "reductionism" and "holism" here in a descriptive sense, not as technical labels for these models. For more general discussion of these terms, see http://en.wikipedia.org/wiki/Reductionism and http://en.wikipedia.org/wiki/Holism.

SOC, causation and prediction

If a stock market index drops by a fraction of a percent in a day, there is no need for an explanation. But if it drops 10%, people want to know why. Pundits on television are willing to offer explanations, but the real answer may be that there is no explanation.

Day-to-day variability in the stock market shows evidence of criticality: the distribution of value changes is long-tailed and the time series exhibits 1/f noise. If the stock market is a self-organized critical system, we should expect occasional large changes as part of the ordinary behavior of the market.

The distribution of earthquake sizes is also long-tailed, and there are simple models of the dynamics of geological faults that might explain this behavior. If these models are right, they imply that large earthquakes are unexceptional; that is, they do not require explanation any more than small earthquakes do.

Similarly, Charles Perrow has suggested that failures in large engineered systems, like nuclear power plants, are like avalanches in the sand pile model. Most failures are small, isolated and harmless, but occasionally a coincidence of bad fortune yields a catastrophe. When big accidents occur, investigators go looking for the cause, but if Perrow's ``normal accident theory'' is correct, there may be no cause.

These conclusions are not comforting. Among other things, they imply that large earthquakes and some kinds of accidents are fundamentally unpredictable. It is impossible to look at the state of a critical system and say whether a large avalanche is ``due.'' If the system is in a critical state, then a large avalanche is always possible. It just depends on the next grain of sand.

In a sand-pile model, what is the cause of a large avalanche? Philosophers sometimes distinguish the proximate cause, which is most immediately responsible, from the ultimate cause, which is, for whatever reason, considered the true cause.

In the sand-pile model, the proximate cause of an avalanche is a grain of sand, but the grain that causes a large avalanche is identical to any other grain, so it offers no special explanation. The ultimate cause of a large avalanche is the structure and dynamics of the system as a whole: large avalanches occur because they are a property of the system.

Many social phenomena, including wars, revolutions, epidemics, inventions and terrorist attacks, are characterized by long-tailed distributions. If the reason for these distributions is that social systems are critical, that suggests that major historical events may be fundamentally unpredictable and unexplainable.

Questions

[Think Complexity can be used as a textbook, so it includes exercises and topics for class discussion. Here are some ideas for discussion and further reading.]

1. In a 1996 paper in Nature, Frette et al. report the results of experiments with rice piles (http://www.nature.com/nature/journal/v379/n6560/abs/379049a0.html). They find that some kinds of rice yield evidence of critical behavior, but others do not.

Similarly, Pruessner and Jensen studied large-scale versions of the forest fire model (using an algorithm similar to Newman and Ziff's). In their 2004 paper, ``Efficient algorithm for the forest fire model,'' they present evidence that the system is not critical after all (http://pre.aps.org/abstract/PRE/v70/i6/e066707). How do these results bear on Bak's claim that SOC explains the prevalence of critical phenomena in nature?

2. In The Fractal Geometry of Nature, Benoit Mandelbrot proposes what he calls a ``heretical'' explanation for the prevalence of long-tailed distributions in natural systems (page 344). It may not be, as Bak suggests, that many systems can generate this behavior in isolation. Instead there may be only a few, but there may be interactions between systems that cause the behavior to propagate.

To support this argument, Mandelbrot points out:

- The distribution of observed data is often ``the joint effect of a fixed underlying 'true distribution' and a highly variable 'filter.'''

- Long-tailed distributions are robust to filtering; that is, ``a wide variety of filters leave their asymptotic behavior unchanged.''

What do you think of this argument? Would you characterize it as reductionist or holist?

3. Read about the ``Great Man'' theory of history at http://en.wikipedia.org/wiki/Great_man_theory. What implication does self-organized criticality have for this theory?

Tuesday, February 14, 2012

Think Complexity: Part Four

My new book, Think Complexity, will be published by O'Reilly Media in March. For people who can't stand to wait that long, I am publishing excerpts here. If you really can't wait, you can read the free version at thinkcomplex.com.

And we need a blurb. Think Complexity goes to press soon and we have a space on the back cover for a couple of endorsements. If you like the book and have something quotable to say about it, let me know. Thanks!

In Part One I outlined the topics in Think Complexity and contrasted a classical physical model of planetary orbits with an example from complexity science: Schelling's model of racial segregation.

In Part Two I outlined some of the ways complexity differs from classical science. In Part Three, I described differences in the ways complex models are used, and their effects in engineering and (of all things) epistemology.

In this installment, I pull together discussions from two chapters: the Watts-Strogatz model of small world graphs, and the Barabasi-Albert model of scale free networks. But it all starts with Stanley Milgram.

Stanley Milgram

Stanley Milgram was an American social psychologist who conducted two of the most famous experiments in social science: the Milgram experiment, which studied people's obedience to authority (http://en.wikipedia.org/wiki/Milgram_experiment), and the Small World Experiment (http://en.wikipedia.org/wiki/Small_world_phenomenon), which studied the structure of social networks.

In the Small World Experiment, Milgram sent a package to several randomly-chosen people in Wichita, Kansas, with instructions asking them to forward an enclosed letter to a target person, identified by name and occupation, in Sharon, Massachusetts (which is the town near Boston where I grew up). The subjects were told that they could mail the letter directly to the target person only if they knew him personally; otherwise they were instructed to send it, and the same instructions, to a relative or friend they thought would be more likely to know the target person.

Many of the letters were never delivered, but among the ones that were, the average path length---the number of times the letters were forwarded---was about six. This result was taken to confirm previous observations (and speculations) that the typical distance between any two people in a social network is about ``six degrees of separation.''

This conclusion is surprising because most people expect social networks to be localized---people tend to live near their friends---and in a graph with local connections, path lengths tend to increase in proportion to geographical distance. For example, most of my friends live nearby, so I would guess that the average distance between nodes in a social network is about 50 miles. Wichita is about 1600 miles from Boston, so if Milgram's letters traversed typical links in the social network, they should have taken 32 hops, not six.

Watts and Strogatz

In 1998 Duncan Watts and Steven Strogatz published a paper in Nature, ``Collective dynamics of 'small-world' networks,'' that proposed an explanation for the small world phenomenon. You can download it from http://www.nature.com/nature/journal/v393/n6684/abs/393440a0.html.

Watts and Strogatz started with two kinds of graph that were well understood: random graphs and regular graphs. They looked at two properties of these graphs, clustering and path length.

Clustering is a measure of the ``cliquishness'' of the graph. In a graph, a clique is a subset of nodes that are all connected to each other; in a social network, a clique is a set of friends who all know each other. Watts and Strogatz defined a clustering coefficient that quantifies the likelihood that two nodes that are connected to the same node are also connected to each other.

Path length is a measure of the average distance between two nodes, which corresponds to the degrees of separation in a social network.

Their initial result is what you might expect: regular graphs have high clustering and high path lengths; random graphs with the same size tend to have low clustering and low path lengths. So neither of these is a good model of social networks, which seem to combine high clustering with short path lengths.

Their goal was to create a generative model of a social network. A generative model tries to explain a phenomenon by modeling the process that builds or leads to the phenomenon. In this case Watts and Strogatz proposed a process for building small-world graphs:

Barabasi and Albert

In 1999 Barabasi and Albert published a paper in Science, ``Emergence of Scaling in Random Networks,'' that characterizes the structure (also called ``topology'') of several real-world networks, including graphs that represent the interconnectivity of movie actors, world-wide web (WWW) pages, and elements in the electrical power grid in the western United States. You can download the paper from http://www.sciencemag.org/content/286/5439/509.

They measure the degree (number of connections) of each node and compute P(k), the probability that a vertex has degree k; then they plot P(k) versus k on a log-log scale. The tail of the plot fits a straight line, so they conclude that it obeys a power law; that is, as k gets large, P(k) is asymptotic to k^(-γ), where γ is a parameter that determines the rate of decay.

They also propose a model that generates random graphs with the same property. The essential features of the model, which distinguish it from the model and the Watts-Strogatz model, are:

Growth: Instead of starting with a fixed number of vertices, Barabasi and Albert start with a small graph and add vertices gradually.

Preferential attachment: When a new edge is created, it is more likely to connect to a vertex that already has a large number of edges. This ``rich get richer'' effect is characteristic of the growth patterns of some real-world networks.

Finally, they show that graphs generated by this model have a distribution of degrees that obeys a power law. Graphs that have this property are sometimes called scale-free networks; see http://en.wikipedia.org/wiki/Scale-free_network. That name can be confusing because it is the distribution of degrees that is scale-free, not the network.

In order to maximize confusion, distributions that obey a power law are sometimes called scaling distributions because they are invariant under a change of scale. That means that if you change the units the quantities are expressed in, the slope parameter, γ, doesn't change. You can read http://en.wikipedia.org/wiki/Power_law for the details, but it is not important for what we are doing here.

Explanatory models

We started the discussion of networks with Milgram's Small World Experiment, which shows that path lengths in social networks are surprisingly small; hence, ``six degrees of separation''. When we see something surprising, it is natural to ask ``Why?'' but sometimes it's not clear what kind of answer we are looking for.

One kind of answer is an explanatory model. The logical structure of an explanatory model is:

At its core, this is an argument by analogy, which says that if two things are similar in some ways, they are likely to be similar in other ways. Argument by analogy can be useful, and explanatory models can be satisfying, but they do not constitute a proof in the mathematical sense of the word.

Remember that all models leave out, or ``abstract away'' details that we think are unimportant. For any system there are many possible models that include or ignore different features. And there might be models that exhibit different behaviors, B, B' and B'', that are similar to O in different ways. In that case, which model explains O?

The small world phenomenon is an example: the Watts-Strogatz (WS) model and the (BA) model both exhibit small world behavior, but they offer different explanations:

As is often the case in young areas of science, the problem is not that we have no explanations, but too many.

Questions

[Think Complexity can be used as a textbook, so it includes exercises and topics for class discussion. Here are some ideas for discussion and further reading.]

Are these explanations compatible; that is, can they both be right? Which do you find more satisfying as an explanation, and why? Is there data you could collect, or an experiment you could perform, that would provide evidence in favor of one model over the other?

Choosing among competing models is the topic of Thomas Kuhn's essay, ``Objectivity, Value Judgment, and Theory Choice.'' You can download it here in PDF. What criteria does Kuhn propose for choosing among competing models? Do these criteria influence your opinion about the WS and BA models? Are there other criteria you think should be considered?

And we need a blurb. Think Complexity goes to press soon and we have a space on the back cover for a couple of endorsements. If you like the book and have something quotable to say about it, let me know. Thanks!

In Part One I outlined the topics in Think Complexity and contrasted a classical physical model of planetary orbits with an example from complexity science: Schelling's model of racial segregation.

In Part Two I outlined some of the ways complexity differs from classical science. In Part Three, I described differences in the ways complex models are used, and their effects in engineering and (of all things) epistemology.

In this installment, I pull together discussions from two chapters: the Watts-Strogatz model of small world graphs, and the Barabasi-Albert model of scale-free networks. But it all starts with Stanley Milgram.

Stanley Milgram

Stanley Milgram was an American social psychologist who conducted two of the most famous experiments in social science: the Milgram experiment, which studied people's obedience to authority (http://en.wikipedia.org/wiki/Milgram_experiment), and the Small World Experiment (http://en.wikipedia.org/wiki/Small_world_phenomenon), which studied the structure of social networks.

In the Small World Experiment, Milgram sent a package to several randomly-chosen people in Wichita, Kansas, with instructions asking them to forward an enclosed letter to a target person, identified by name and occupation, in Sharon, Massachusetts (which is the town near Boston where I grew up). The subjects were told that they could mail the letter directly to the target person only if they knew him personally; otherwise they were instructed to send it, and the same instructions, to a relative or friend they thought would be more likely to know the target person.

Many of the letters were never delivered, but for the ones that were, the average path length---the number of times the letters were forwarded---was about six. This result was taken to confirm previous observations (and speculations) that the typical distance between any two people in a social network is about ``six degrees of separation.''

This conclusion is surprising because most people expect social networks to be localized---people tend to live near their friends---and in a graph with local connections, path lengths tend to increase in proportion to geographical distance. For example, most of my friends live nearby, so I would guess that the average distance between connected nodes in a social network is about 50 miles. Wichita is about 1600 miles from Boston, so if Milgram's letters traversed typical links in the social network, they should have taken 1600 / 50 = 32 hops, not six.

Watts and Strogatz

In 1998 Duncan Watts and Steven Strogatz published a paper in Nature, ``Collective dynamics of 'small-world' networks,'' that proposed an explanation for the small world phenomenon. You can download it from http://www.nature.com/nature/journal/v393/n6684/abs/393440a0.html.

Watts and Strogatz started with two kinds of graph that were well understood: random graphs and regular graphs. They looked at two properties of these graphs, clustering and path length.

Clustering is a measure of the ``cliquishness'' of the graph. In a graph, a clique is a subset of nodes that are all connected to each other; in a social network, a clique is a set of friends who all know each other. Watts and Strogatz defined a clustering coefficient that quantifies the likelihood that two nodes that are connected to the same node are also connected to each other.
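To make the definition concrete, here is a minimal sketch of a clustering coefficient in Python. It assumes the graph is stored as a dictionary that maps each node to the set of its neighbors; the function names and the example graph are hypothetical, and treating a node with fewer than two neighbors as having coefficient 0 is a convention chosen here, not something taken from the paper.

```python
from itertools import combinations


def clustering_coefficient(graph, node):
    """Fraction of pairs of neighbors of `node` that are also connected."""
    neighbors = graph[node]
    if len(neighbors) < 2:
        return 0.0  # assumed convention: no pairs to check
    pairs = list(combinations(neighbors, 2))
    linked = sum(1 for u, v in pairs if v in graph[u])
    return linked / len(pairs)


def average_clustering(graph):
    """Mean of the per-node coefficients over the whole graph."""
    return sum(clustering_coefficient(graph, n) for n in graph) / len(graph)


# Hypothetical example: a triangle (a, b, c) plus a node d that knows only c.
friends = {
    "a": {"b", "c"},
    "b": {"a", "c"},
    "c": {"a", "b", "d"},
    "d": {"c"},
}

print(clustering_coefficient(friends, "a"))
print(average_clustering(friends))
```

In this example, both of a's friends know each other, so a's coefficient is 1; only one of the three pairs of c's friends know each other, so c's coefficient is 1/3.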

Path length is a measure of the average distance between two nodes, which corresponds to the degrees of separation in a social network.
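One way to compute this, sketched under the same assumption that the graph is a dictionary mapping each node to a set of neighbors, is to run a breadth-first search from every node and average the hop counts. The function names are hypothetical, and ignoring pairs of nodes that are not connected at all is a choice made for this sketch.

```python
from collections import deque


def shortest_path_lengths(graph, source):
    """Breadth-first search: number of hops from `source` to each reachable node."""
    dist = {source: 0}
    queue = deque([source])
    while queue:
        node = queue.popleft()
        for neighbor in graph[node]:
            if neighbor not in dist:
                dist[neighbor] = dist[node] + 1
                queue.append(neighbor)
    return dist


def average_path_length(graph):
    """Mean hop count over all ordered pairs of distinct, connected nodes."""
    total = 0
    pairs = 0
    for source in graph:
        for target, hops in shortest_path_lengths(graph, source).items():
            if target != source:
                total += hops
                pairs += 1
    return total / pairs if pairs else 0.0


# Hypothetical example: four nodes in a line, 0 - 1 - 2 - 3.
chain = {0: {1}, 1: {0, 2}, 2: {1, 3}, 3: {2}}
print(average_path_length(chain))
```

Breadth-first search is enough here because every edge counts as one hop; a weighted graph would need a different algorithm.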

Their initial result is what you might expect: regular graphs have high clustering and high path lengths; random graphs of the same size tend to have low clustering and low path lengths. So neither of these is a good model of social networks, which seem to combine high clustering with short path lengths.

Their goal was to create a generative model of a social network. A generative model tries to explain a phenomenon by modeling the process that builds or leads to the phenomenon. In this case Watts and Strogatz proposed a process for building small-world graphs:

- Start with a regular graph with n nodes and degree k. Watts and Strogatz start with a ring lattice, which is a kind of regular graph. You could replicate their experiment or try instead a graph that is regular but not a ring lattice.

- Choose a subset of the edges in the graph and ``rewire'' them by replacing them with random edges. Again, you could replicate the procedure described in the paper or experiment with alternatives. The proportion of edges that are rewired is a parameter, p, that controls how random the graph is. With p=0, the graph is regular; with p=1 it is random.
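The two steps above can be sketched in Python as follows. This is a simplified variant, not the exact procedure from the paper: it assumes k is even, visits every edge once, and rewires each with probability p, so p is the expected (not exact) proportion of rewired edges. The function names are hypothetical.

```python
import random


def ring_lattice(n, k):
    """Regular graph: each of n nodes is linked to its k nearest neighbors (k even)."""
    graph = {i: set() for i in range(n)}
    for i in range(n):
        for offset in range(1, k // 2 + 1):
            j = (i + offset) % n
            graph[i].add(j)
            graph[j].add(i)
    return graph


def rewire(graph, p, rng=random):
    """With probability p, replace each edge (u, v) with (u, w) for a random w."""
    nodes = list(graph)
    edges = [(u, v) for u in graph for v in graph[u] if u < v]
    for u, v in edges:
        if rng.random() >= p:
            continue  # leave this edge alone
        # Avoid self-loops and duplicate edges.
        candidates = [w for w in nodes if w != u and w not in graph[u]]
        if not candidates:
            continue
        w = rng.choice(candidates)
        graph[u].remove(v)
        graph[v].remove(u)
        graph[u].add(w)
        graph[w].add(u)
    return graph


lattice = ring_lattice(20, 4)
small_world = rewire(ring_lattice(20, 4), p=0.1, rng=random.Random(17))
```

Rewiring keeps the number of edges fixed, so any change in clustering or path length between `lattice` and `small_world` comes from where the edges go, not from how many there are.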

Barabasi and Albert

In 1999 Barabasi and Albert published a paper in Science, ``Emergence of Scaling in Random Networks,'' that characterizes the structure (also called ``topology'') of several real-world networks, including graphs that represent the interconnectivity of movie actors, world-wide web (WWW) pages, and elements in the electrical power grid in the western United States. You can download the paper from http://www.sciencemag.org/content/286/5439/509.

They measure the degree (number of connections) of each node and compute P(k), the probability that a vertex has degree k; then they plot P(k) versus k on a log-log scale. The tail of the plot fits a straight line, so they conclude that it obeys a power law; that is, as k gets large, P(k) is asymptotic to k^(-γ), where γ is a parameter that determines the rate of decay.
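To see why a straight line on a log-log plot suggests a power law, take the logarithm of both sides. The constant c below is an assumed normalization factor that does not appear in the text above:

```latex
P(k) \approx c \, k^{-\gamma}
\quad\Longrightarrow\quad
\log P(k) \approx \log c - \gamma \log k
```

So if log P(k) is plotted against log k, the tail falls on a line with slope -γ and intercept log c.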

They also propose a model that generates random graphs with the same property. The essential features of the model, which distinguish it from the Erdos-Renyi model of random graphs and the Watts-Strogatz model, are:

- Growth: Instead of starting with a fixed number of vertices, Barabasi and Albert start with a small graph and add vertices gradually.

- Preferential attachment: When a new edge is created, it is more likely to connect to a vertex that already has a large number of edges. This ``rich get richer'' effect is characteristic of the growth patterns of some real-world networks.

Finally, they show that graphs generated by this model have a distribution of degrees that obeys a power law. Graphs that have this property are sometimes called scale-free networks; see http://en.wikipedia.org/wiki/Scale-free_network. That name can be confusing because it is the distribution of degrees that is scale-free, not the network.
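Here is a minimal sketch of growth with preferential attachment. The details are assumptions made for illustration rather than the paper's exact procedure: the seed graph is a small complete graph, each new vertex adds m edges, and the function name is hypothetical.

```python
import random


def barabasi_albert(n, m, rng=random):
    """Grow a graph to n nodes; each new node attaches to m existing nodes
    chosen with probability proportional to their current degree."""
    # Assumed seed graph: m + 1 nodes, all connected to each other.
    graph = {i: set(range(m + 1)) - {i} for i in range(m + 1)}
    # Each node appears in `endpoints` once per edge it touches, so a uniform
    # draw from this list is a degree-proportional draw from the nodes.
    endpoints = [u for u in graph for _ in graph[u]]
    for new in range(m + 1, n):
        targets = set()
        while len(targets) < m:
            targets.add(rng.choice(endpoints))
        graph[new] = set()
        for t in targets:
            graph[new].add(t)
            graph[t].add(new)
            endpoints.extend([new, t])
    return graph


ba = barabasi_albert(200, 2, rng=random.Random(17))
degrees = sorted((len(nbrs) for nbrs in ba.values()), reverse=True)
print(degrees[:5], degrees[-5:])
```

Comparing the largest and smallest degrees gives a rough sense of the ``rich get richer'' effect: vertices added early have more chances to collect edges, and each edge they collect makes the next one more likely.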

In order to maximize confusion, distributions that obey a power law are sometimes called scaling distributions because they are invariant under a change of scale. That means that if you change the units the quantities are expressed in, the slope parameter, γ, doesn't change. You can read http://en.wikipedia.org/wiki/Power_law for the details, but it is not important for what we are doing here.

Explanatory models

We started the discussion of networks with Milgram's Small World Experiment, which shows that path lengths in social networks are surprisingly small; hence, ``six degrees of separation''. When we see something surprising, it is natural to ask ``Why?'' but sometimes it's not clear what kind of answer we are looking for.

One kind of answer is an explanatory model. The logical structure of an explanatory model is:

- In a system, S, we see something observable, O, that warrants explanation.

- We construct a model, M, that is analogous to the system; that is, there is a correspondence between the elements of the model and the elements of the system.

- By simulation or mathematical derivation, we show that the model exhibits a behavior, B, that is analogous to O.

- We conclude that S exhibits O because S is similar to M, M exhibits B, and B is similar to O.

At its core, this is an argument by analogy, which says that if two things are similar in some ways, they are likely to be similar in other ways. Argument by analogy can be useful, and explanatory models can be satisfying, but they do not constitute a proof in the mathematical sense of the word.

Remember that all models leave out, or ``abstract away'' details that we think are unimportant. For any system there are many possible models that include or ignore different features. And there might be models that exhibit different behaviors, B, B' and B'', that are similar to O in different ways. In that case, which model explains O?

The small world phenomenon is an example: the Watts-Strogatz (WS) model and the Barabasi-Albert (BA) model both exhibit small world behavior, but they offer different explanations:

- The WS model suggests that social networks are ``small'' because they include both strongly-connected clusters and ``weak ties'' that connect clusters.

- The BA model suggests that social networks are small because they include nodes with high degree that act as hubs, and that hubs grow, over time, due to preferential attachment.

As is often the case in young areas of science, the problem is not that we have no explanations, but too many.

Questions

[Think Complexity can be used as a textbook, so it includes exercises and topics for class discussion. Here are some ideas for discussion and further reading.]

Are these explanations compatible; that is, can they both be right? Which do you find more satisfying as an explanation, and why? Is there data you could collect, or an experiment you could perform, that would provide evidence in favor of one model over the other?

Choosing among competing models is the topic of Thomas Kuhn's essay, ``Objectivity, Value Judgment, and Theory Choice.'' The essay is available online in PDF. What criteria does Kuhn propose for choosing among competing models? Do these criteria influence your opinion about the WS and BA models? Are there other criteria you think should be considered?

Monday, February 6, 2012

Think Complexity: Part Three

My new book, Think Complexity, will be published by O'Reilly Media in March. For people who can't stand to wait that long, I am publishing excerpts here. If you really can't wait, you can read the free version at thinkcomplex.com.

In Part One I outlined the topics in Think Complexity and contrasted a classical physical model of planetary orbits with an example from complexity science: Schelling's model of racial segregation.

In Part Two I outlined some of the ways complexity differs from classical science. In this installment, I describe differences in the ways complex models are used, and their effects in engineering and (of all things) epistemology.

A new kind of model

Complex models are often appropriate for different purposes and interpretations:

Predictive→explanatory: Schelling's model of segregation might shed light on a complex social phenomenon, but it is not useful for prediction. On the other hand, a simple model of celestial mechanics can predict solar eclipses, down to the second, years in the future.

Realism→instrumentalism: Classical models lend themselves to a realist interpretation; for example, most people accept that electrons are real things that exist. Instrumentalism is the view that models can be useful even if the entities they postulate don't exist. George Box wrote what might be the motto of instrumentalism: ``All models are wrong, but some are useful.''

Reductionism→holism: Reductionism is the view that the behavior of a system can be explained by understanding its components. For example, the periodic table of the elements is a triumph of reductionism, because it explains the chemical behavior of elements with a simple model of the electrons in an atom. Holism is the view that some phenomena that appear at the system level do not exist at the level of components, and cannot be explained in component-level terms.

A new kind of engineering

I have been talking about complex systems in the context of science, but complexity is also a cause, and effect, of changes in engineering and the organization of social systems:

Centralized→decentralized: Centralized systems are conceptually simple and easier to analyze, but decentralized systems can be more robust. For example, in the World Wide Web clients send requests to centralized servers; if the servers are down, the service is unavailable. In peer-to-peer networks, every node is both a client and a server. To take down the service, you have to take down every node.

Isolation→interaction: In classical engineering, the complexity of large systems is managed by isolating components and minimizing interactions. This is still an important engineering principle; nevertheless, the availability of cheap computation makes it increasingly feasible to design systems with complex interactions between components.

One-to-many→many-to-many: In many communication systems, broadcast services are being augmented, and sometimes replaced, by services that allow users to communicate with each other and create, share, and modify content.

Top-down→bottom-up: In social, political and economic systems, many activities that would normally be centrally organized now operate as grassroots movements. Even armies, which are the canonical example of hierarchical structure, are moving toward devolved command and control.

Analysis→computation: In classical engineering, the space of feasible designs is limited by our capability for analysis. For example, designing the Eiffel Tower was possible because Gustave Eiffel developed novel analytic techniques, in particular for dealing with wind load. Now tools for computer-aided design and analysis make it possible to build almost anything that can be imagined. Frank Gehry's Guggenheim Museum Bilbao is my favorite example.

Design→search: Engineering is sometimes described as a search for solutions in a landscape of possible designs. Increasingly, the search process can be automated. For example, genetic algorithms explore large design spaces and discover solutions human engineers would not imagine (or like). The ultimate genetic algorithm, evolution, notoriously generates designs that violate the rules of human engineering.

A new kind of thinking

We are getting farther afield now, but the shifts I am postulating in the criteria of scientific modeling are related to 20th Century developments in logic and epistemology.

Aristotelian logic→many-valued logic: In traditional logic, any proposition is either true or false. This system lends itself to math-like proofs, but fails (in dramatic ways) for many real-world applications. Alternatives include many-valued logic, fuzzy logic, and other systems designed to handle indeterminacy, vagueness, and uncertainty. Bart Kosko discusses some of these systems in Fuzzy Thinking.

Frequentist probability→Bayesianism: Bayesian probability has been around for centuries, but it was not widely used until recently, when its adoption was facilitated by the availability of cheap computation and the reluctant acceptance of subjectivity in probabilistic claims. Sharon Bertsch McGrayne presents this history in The Theory That Would Not Die.

Objective→subjective: The Enlightenment, and philosophic modernism, are based on belief in objective truth; that is, truths that are independent of the people who hold them. 20th Century developments including quantum mechanics, Godel's Incompleteness Theorem, and Kuhn's study of the history of science called attention to seemingly unavoidable subjectivity in even ``hard sciences'' and mathematics. Rebecca Goldstein presents the historical context of Godel's proof in Incompleteness.

Physical law→theory→model: Some people distinguish between laws, theories, and models, but I think they are the same thing. People who use ``law'' are likely to believe that it is objectively true and immutable; people who use ``theory'' concede that it is subject to revision; and ``model'' concedes that it is based on simplification and approximation.

Some concepts that are called ``physical laws'' are really definitions; others are, in effect, the assertion that a model predicts or explains the behavior of a system particularly well. I discuss the nature of physical models later in Think Complexity.

Determinism→indeterminism: Determinism is the view that all events are caused, inevitably, by prior events. Forms of indeterminism include randomness, probabilistic causation, and fundamental uncertainty. We come back to this topic later in the book.

These trends are not universal or complete, but the center of opinion is shifting along these axes. As evidence, consider the reaction to Thomas Kuhn's The Structure of Scientific Revolutions, which was reviled when it was published and is now considered almost uncontroversial.

These trends are both cause and effect of complexity science. For example, highly abstracted models are more acceptable now because of the diminished expectation that there should be a unique correct model for every system. Conversely, developments in complex systems challenge determinism and the related concept of physical law.

The excerpts so far have been from Chapter 1 of Think Complexity. Future excerpts will go into some of these topics in more depth. In the meantime, you might be interested in the timeline of complexity science on Wikipedia.
