My collaborator asked me to compare two methods and explain their assumptions, properties, pros and cons:

1) Logistic regression: This is the standard method in the field. Researchers have designed a survey instrument that assigns each offender a score from -3 to 12. Using data from previous offenders, they estimate the parameters of a logistic regression model and generate a predicted probability of recidivism for each score.

2) Post-test probabilities: An alternative of interest to my collaborator is the idea of treating survey scores the way some tests are used in medicine, with a threshold that distinguishes positive and negative results. Then for people who "test positive" we can compute the post-test probability of recidivism.

In addition to these two approaches, I considered three other models:

3) Independent estimates for each group: In the previous article, I cited the notion that "the probability of recidivism for an individual offender will be the same as the observed recidivism rate for the group to which he most closely belongs" (Harris and Hanson 2004). If we take this idea to an extreme, the estimate for each individual should be based only on observations of the same risk group. The logistic regression model is holistic in the sense that observations from each group influence the estimates for all groups. An individual who scores a 6, for example, might reasonably object if the inclusion of offenders from other risk classes has the effect of increasing the estimated risk for his own class. To evaluate this concern, I also considered a simple model where the risk in each group is estimated independently.

4) Logistic regression with a quadratic term: Simple logistic regression is based on the assumption that the scores are linear in the sense that each increment corresponds to the same increase in risk; for example, it assumes that the odds ratio between groups 1 and 2 is the same as the odds ratio between groups 9 and 10. To test that assumption, I ran a model that includes the square of the scores as a predictive variable. If risks are actually non-linear, this quadratic term might provide a better fit to the data. But it doesn't, so the linear assumption holds up, at least to this simple test.

5) Bayesian logistic regression: This is a natural extension of logistic regression that takes as inputs prior distributions for the parameters, and generates posterior distributions for the parameters and predictive distributions for the risks in each group. For this application, I didn't expect this approach to provide any great advantage over conventional logistic regression, but I think the Bayesian version is a powerful and surprisingly simple idea, so I used this project as an excuse to explore it.

Details of these methods are in this IPython notebook; I summarize the results below.

Logistic regression

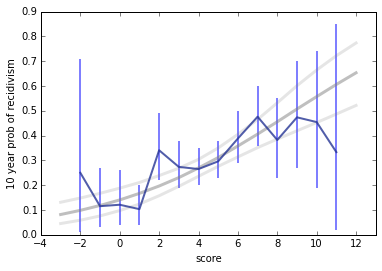

My collaborator provided a dataset with 10-year recidivism outcomes for 703 offenders. The definition of recidivism in this case is that the individual was charged with another offense within 10 years of release (but not necessarily convicted). The range of scores for offenders in the dataset is from -2 to 11.

I estimated parameters using the StatsModels module. The following figure shows the estimated probability of recidivism for each score and the predictive 95% confidence interval.

A striking feature of this graph is the range of risks, from less than 10% to more than 60%. This range suggests that it is important to get this right, both for the individuals involved and for society.

[One note: the logistic regression model is linear when expressed in log-odds, so it is not a straight line when expressed in probabilities.]
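To make that note concrete, here is a minimal sketch using hypothetical coefficients (the values of `intercept` and `slope` are made up for illustration; they are not the fitted values from the notebook). It shows that the odds ratio between adjacent scores is constant while the step in probability is not.

```python
import math


def logistic(log_odds):
    """Convert log-odds to a probability."""
    return 1.0 / (1.0 + math.exp(-log_odds))


def odds(p):
    """Convert a probability to odds."""
    return p / (1.0 - p)


# Hypothetical coefficients, chosen only for illustration.
intercept, slope = -2.0, 0.25

# The instrument assigns scores from -3 to 12.
probs = {s: logistic(intercept + slope * s) for s in range(-3, 13)}

# The odds ratio between adjacent scores is the same everywhere: exp(slope).
ratio_low = odds(probs[2]) / odds(probs[1])
ratio_high = odds(probs[10]) / odds(probs[9])

# The difference in probability between adjacent scores is not constant.
step_low = probs[2] - probs[1]
step_high = probs[10] - probs[9]

print(ratio_low, ratio_high)
print(step_low, step_high)
```

With any choice of coefficients, the two ratios agree and the two steps differ, which is why the curve bends when plotted as probabilities.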

Post-test probabilities

In the context of medical testing, a "post-test probability/positive" (PTPP) is the answer to the question, "Given that a patient has tested positive for a condition of interest (COI), what is the probability that the patient actually has the condition, as opposed to a false positive test?" The advantage of PTPP in medicine is that it addresses the question that is arguably most relevant to the patient.

In the context of recidivism, if we want to treat risk scores as a binary test, we have to choose a threshold that splits the range into low and high risk groups. As an example, if I choose "6 or more" as the threshold, I can compute the test's sensitivity, specificity, and PTPP:

- With threshold 6, the sensitivity of the test is 42%, meaning that the test successfully identifies (or predicts) 42% of recidivists.

- The specificity is 76%, which is the fraction of non-recidivists who correctly test negative.

- The PTPP is 42%, meaning that 42% of the people who test positive will reoffend. [Note: it is only a coincidence in this case that sensitivity and PTPP are approximately equal.]
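The three quantities in the list above can be computed from a table of scores and outcomes. The following is a rough sketch, not the code from the notebook; the function name `binary_test_summary` and the toy data are made up for illustration and do not reproduce the 42% and 76% figures.

```python
def binary_test_summary(scores, outcomes, threshold):
    """Treat `score >= threshold` as a positive test and summarize it.

    scores   -- iterable of risk scores
    outcomes -- iterable of booleans, True if the person recidivated
    """
    tp = fp = tn = fn = 0
    for score, recid in zip(scores, outcomes):
        positive = score >= threshold
        if positive and recid:
            tp += 1
        elif positive and not recid:
            fp += 1
        elif not positive and recid:
            fn += 1
        else:
            tn += 1
    return {
        # Fraction of recidivists who test positive.
        "sensitivity": tp / (tp + fn),
        # Fraction of non-recidivists who test negative.
        "specificity": tn / (tn + fp),
        # Fraction of people who test positive and go on to reoffend.
        "ptpp": tp / (tp + fp),
    }


# Made-up toy data, for illustration only.
toy_scores = [1, 2, 3, 4, 5, 6, 6, 7, 8, 9]
toy_outcomes = [False, False, True, False, False,
                True, False, False, True, True]

summary = binary_test_summary(toy_scores, toy_outcomes, threshold=6)
print(summary)
```

Note that this sketch raises `ZeroDivisionError` when a denominator is zero, for example when nobody scores at or above the threshold.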

Now suppose we customize the test for each individual. So if someone scores 3, we would set the threshold to 3 and compute a PTPP and a credible interval. We could treat the result as the probability of recidivism for people who test 3 or higher. The following figure shows the results (in blue) superimposed on the results from logistic regression (in grey).

A few features are immediately apparent:

- At the low end of the range, PTPP is substantially higher than the risk estimated by logistic regression.

- At the high end, PTPP is lower.

- With this dataset, it's not possible to compute PTPP for the lowest and highest scores (as contrasted with logistic regression, which can extrapolate).

- For the lowest risk scores, the credible intervals on PTPP are a little smaller than the confidence intervals of logistic regression.

- For the high risk scores, the credible intervals are very large, due to the small amount of data.

So there are practical challenges in estimating PTPPs with a small dataset. There are also philosophical challenges. If the goal is to estimate the risk of recidivism for an individual, it's not clear that PTPP is the answer to the right question.

This way of using PTPP has the effect of putting each individual in a reference class with everyone who scored the same or higher. Someone who is subject to this test could reasonably object to being lumped with higher-risk offenders. And they might reasonably ask why it's not the other way around: why is the reference class "my risk or higher" and not "my risk or lower"?

I'm not sure there is a principled answer to that question. In general, we should prefer a smaller reference class, and one that does not systematically bias the estimates. Using PTPP (as proposed) doesn't do well by these criteria.

But logistic regression is vulnerable to the same objection: outcomes from high-risk offenders have some effect on the estimates for lower-risk groups. Is that fair?

Independent estimates

To answer that question, I also ran a simple model that estimates the risk for each score independently. I used a uniform distribution as a prior for each group, computed a Bayesian posterior distribution, and plotted the estimated risks and credible intervals. Here are the results, again superimposed on the logistic regression model.

People who score 0, 1, or 8 or more would like this model. People who score -2, 2, or 7 would hate it. But given the size of the credible intervals, it's clear that we shouldn't take these variations too seriously.
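The per-group estimate described above can be sketched with a simple grid approximation. This is one possible way to do it, not necessarily how the notebook does it; the function names and the counts (3 recidivists out of 10) are hypothetical.

```python
def posterior_grid(k, n, steps=1001):
    """Posterior over the recidivism rate p after observing k recidivists
    out of n offenders, starting from a uniform prior, on an even grid."""
    grid = [i / (steps - 1) for i in range(steps)]
    # With a uniform prior, the posterior is proportional to the likelihood.
    weights = [p**k * (1 - p)**(n - k) for p in grid]
    total = sum(weights)
    return grid, [w / total for w in weights]


def summarize(grid, probs, mass=0.95):
    """Return the posterior mean and a central credible interval."""
    mean = sum(p * w for p, w in zip(grid, probs))
    tail = (1 - mass) / 2
    cumulative, low, high = 0.0, None, None
    for p, w in zip(grid, probs):
        cumulative += w
        if low is None and cumulative >= tail:
            low = p
        if high is None and cumulative >= 1 - tail:
            high = p
    return mean, (low, high)


# Hypothetical counts for one score group, for illustration only.
grid, probs = posterior_grid(k=3, n=10)
mean, (low, high) = summarize(grid, probs)
print(mean, low, high)
```

With a uniform prior the exact posterior is a Beta(k+1, n-k+1) distribution, so the posterior mean should be close to (k+1)/(n+2). With only 10 observations the interval is wide, which is the practical problem with small groups.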

With a bigger dataset, the credible intervals would be smaller, and we expect the results to be ordered so that groups with higher scores have higher rates of recidivism. But with this small dataset, we have some practical problems.

We could smooth the data by combining adjacent scores into risk ranges. But then we're faced again with the objection that this kind of lumping is unfair to people at the low end of each range, and dangerously generous to people at the high end.

I think logistic regression balances these conflicting criteria reasonably: by taking advantage of background information -- the assumption that higher scores correspond to higher risks -- it uses data efficiently, generating sensible estimates for all scores and confidence intervals that represent the precision of the estimates.

However, it is based on an assumption that might be too strong, that the relationship between log-odds and risk score is linear. If that's not true, the model might be unfair to some groups and too generous to others.

Logistic regression with a quadratic term

To see whether the linear assumption is valid, we can run logistic regression again with two explanatory variables: score and the square of score. If the relationship is actually non-linear, this quadratic model might fit the data better.

The estimated coefficient for the quadratic term is negative, so the fitted curve, expressed in log-odds, is a parabola with downward curvature. That's consistent with the shape of the independent estimates in the previous section. But the estimate for the quadratic parameter is not statistically significant (p=0.11), which suggests that it might be due to randomness.
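Written out, the two models being compared look like this, where $x$ is the score and $p$ is the probability of recidivism. The symbols $\beta_0$, $\beta_1$, and $\beta_2$ are notation introduced here for illustration.

```latex
% Simple (linear) logistic regression
\log\frac{p}{1-p} = \beta_0 + \beta_1 x

% Logistic regression with a quadratic term
\log\frac{p}{1-p} = \beta_0 + \beta_1 x + \beta_2 x^2
```

When $\beta_2 < 0$, the log-odds peak at $x = -\beta_1 / (2\beta_2)$ and decline for higher scores, so the estimated risk can fall at the top of the range if that peak lies inside the range of scores.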

Here is what the results look like graphically, again compared to the logistic regression model:

The estimates are lower for the lowest score groups, about the same in the middle, and lower again for the highest scores. At the high end, it seems unlikely that the risk of recidivism actually declines for the highest scores, and more likely that we are overfitting the data.

This model suggests that the linear logistic regression might overestimate risk for the lowest-scoring groups. It might be worth exploring this possibility with more data.

Bayesian logistic regression

One of the best features of logistic regression is that the results are in the form of a probability, which is useful for all kinds of analysis and especially for the kind of risk-benefit analysis that underlies parole decisions.

But conventional logistic regression does not admit prior information about the parameters, and the result is not a posterior distribution, but just a point estimate and confidence interval. In general, confidence intervals are less useful as part of a decision making process. And if you have ever tried to explain a confidence interval to a general audience, you know they can be problematic.

So, mostly for my own interest, I applied Bayesian logistic regression to the same data. The Bayesian version of logistic regression is conceptually simple and easy to implement, in part because at the core of logistic regression there is a tight connection to Bayes's theorem. I wrote about this connection in a previous article.

My implementation, with explanatory comments, is in the IPython notebook; here are the results:

With a uniform prior for the parameters, the results are almost identical to the results from (conventional) logistic regression. At first glance it seems like the Bayesian version has no advantage, but there are a few reasons it might:

- For each score, we can generate a posterior distribution that represents not just a point estimate, but all possible estimates and their probabilities. If the posterior mean is 55%, for example, we might want to know the probability that the correct value is more than 65%, or less than 45%. The posterior distribution can answer this question; conventional logistic regression cannot.

- If we use estimates from the model as part of another analysis, we could carry the posterior distribution through the subsequent computation, producing a posterior predictive distribution for whatever results the analysis generates.

- If we have background information that guides the choice of the prior distributions, we could use that information to produce better estimates.

But in this example, I don't have a compelling reason to choose a different prior, and there is no obvious need for a posterior distribution: a point estimate is sufficient. So (although it hurts me to admit it) the Bayesian version of logistic regression has no practical advantage for this application.
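For readers who want to see the idea in miniature, here is a rough grid-based sketch of Bayesian logistic regression with a uniform prior. It is not the implementation from the notebook. The function names, the grid bounds, and the toy data are all assumptions made for illustration, and the uniform prior is implicitly truncated to the grid. The last function answers the kind of question raised above: the posterior probability that the risk at a given score exceeds some cutoff.

```python
import math


def log_likelihood(b0, b1, data):
    """Log-likelihood of (score, outcome) pairs under a logistic model."""
    total = 0.0
    for score, recid in data:
        p = 1.0 / (1.0 + math.exp(-(b0 + b1 * score)))
        total += math.log(p if recid else 1.0 - p)
    return total


def grid_posterior(data, b0_values, b1_values):
    """Posterior over (b0, b1), using a uniform prior over the grid."""
    log_post = {(b0, b1): log_likelihood(b0, b1, data)
                for b0 in b0_values for b1 in b1_values}
    # Subtract the maximum before exponentiating to avoid underflow.
    peak = max(log_post.values())
    weights = {key: math.exp(val - peak) for key, val in log_post.items()}
    total = sum(weights.values())
    return {key: w / total for key, w in weights.items()}


def prob_risk_exceeds(posterior, score, cutoff):
    """Posterior probability that the risk at `score` is above `cutoff`."""
    return sum(w for (b0, b1), w in posterior.items()
               if 1.0 / (1.0 + math.exp(-(b0 + b1 * score))) > cutoff)


# Made-up toy data, for illustration only: (score, recidivated).
toy_data = [(0, False), (1, False), (2, False), (3, True), (4, False),
            (5, True), (6, False), (7, True), (8, True), (9, True)]

b0_values = [-6 + 0.1 * i for i in range(121)]   # intercepts from -6 to 6
b1_values = [-1 + 0.02 * i for i in range(151)]  # slopes from -1 to 2

posterior = grid_posterior(toy_data, b0_values, b1_values)
p_high = prob_risk_exceeds(posterior, score=8, cutoff=0.5)
p_low = prob_risk_exceeds(posterior, score=1, cutoff=0.5)
print(p_high, p_low)
```

A grid works here because there are only two parameters; with more explanatory variables, a sampling method would probably be a better fit.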

Summary

Using logistic regression to estimate the risk of recidivism for each score group makes good use of the data and background information. Although the estimate for each group is influenced by outcomes in other groups, this influence is not unfair in any obvious way.

The alternatives I considered are problematic: PTPP is vulnerable to both practical and philosophical objections. Estimating risks for each group independently is impractical with a small dataset, but might work well with more data.

The quadratic logistic model provides no apparent benefit, and probably overfits the data. The Bayesian version of logistic regression is consistent with the conventional version, and has no obvious advantages for this application.

[UPDATE 17 November 2015] Sam Mason wrote to ask if I had considered using Gaussian process regression to model the data. I had not, but it strikes me as a reasonable choice if we think that the risk of recidivism varies smoothly with risk score, but not necessarily with the same risk ratio between successive scores (as assumed by logistic regression).

And then he kindly sent me this IPython notebook, where he demonstrates the technique. Here are two models he fit, using an exponential kernel (left) and a Matern kernel (right).

Here's what Sam has to say about the methods:

"I've done a very naive thing in a Gaussian process regression. I use the GPy toolbox (http://sheffieldml.github.io/GPy), which is a reasonably nice library for doing this sort of thing. GPs have n^2 complexity in the number of data points, so scaling beyond a few hundred points can be awkward but there are various sparse approximations (some included in GPy) should you want to play with much more data. The main thing to choose is the covariance function, i.e. how related points should be considered to be at a given distance. The GPML book by Rasmussen and Williams is a good reference if you want to know more.

"The generally recommended kernel if you don't know much about the data is a Matern kernel and this ends up looking a bit like your quadratic fit (a squared exponential kernel is also commonly used, but tends to be too strong). I'd agree with the GP model that the logistic regression is probably saying that those with high scores are too likely to recidivate. Another kernel that looked like a good fit is the Exponential kernel. This smooths the independent estimates a bit and says that the extreme rates (i.e. <=0 or >=7) are basically equal—which seems reasonable to me."

The shape of these fitted models is plausible to me, and consistent with the data.

At this point, we have probably squeezed out all the information we're going to get from about 700 data points, but I would be interested to see if this shape appears in models of other datasets. If so, it might have practical consequences. For people in the highest risk categories, the predictions from the logistic regression model are substantially higher than the predictions from these GP models.

I'll see if I can get my hands on more data.

In terms of smoothing data by combining adjacent scores - couldn't you do a windowed approach to avoid the unfair lumping? Eg, take a group and its 2 neighbors? You could also do a non-uniform window and count the neighbors less than the group in question. Looks like you'd be able to trade independence from other groups for smoothing. This does have an issue for the end groups, but the smoothed curve might inform a reasonable extrapolation.

Yes, I think that would be a reasonable option, and if the relationship were non-monotonic, it might be the best choice.

Sam Mason sent the following comment, "I'm wondering whether the logistic regression is imposing too much structure on the data. It almost looks (from the Independent estimates plot) as though there could be a step function between groups 1 and 2 with little further change. I was therefore wondering whether you'd attempted any non-parametric analysis?

Gaussian processes are great for this sort of application as they put less constraints on the shape of the function they're inferring while still allowing you to share information between groups."

Sounds like a good thing to investigate. Let me know if you find anything interesting.

It might be a good idea to consider other types of statistical analysis used in the field. For example, survival analysis, to model the time until a delinquent returns to prison.
